Woman Frantically Drives To Find Her Brother After A Call Saying He Was In A Wreck, Only To Find Out It Was An AI Scam That Cloned His Voice

Look out for scammers using AI voice cloning technology!

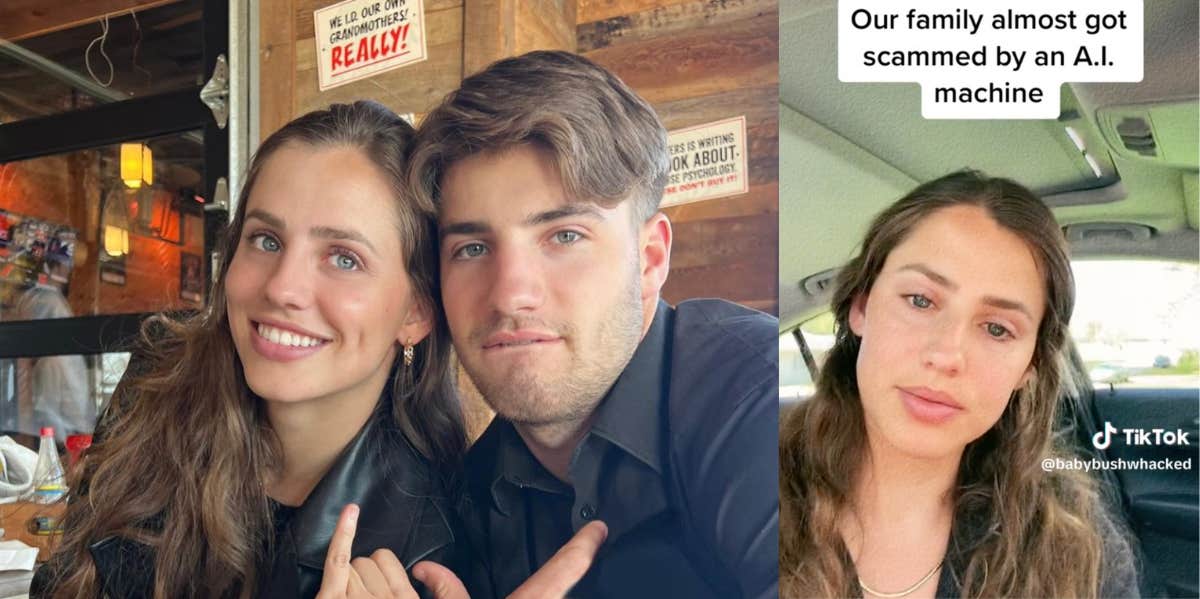

Instagram & TikTok

While scam calls have always been a nuisance, most people are able to easily recognize them as fraud and hang up.

However, new technology is making it way harder to recognize a scam call. Scammers are now using artificial intelligence to swindle people out of money by sounding exactly like a friend or family member in trouble.

One woman was left distraught after a scam call to her grandfather led her to believe her brother had died.

A recent target of the scam was the grandfather of a popular YouTuber, Brooke Bush, who posts regular vlogs with family members on her YouTube channel.

Her grandfather received a call from someone who sounded like her little brother, saying he was about to get into a wreck. Then the line went dead. After her grandfather relayed the news, Brooke frantically drove around looking for her brother, fearing he was dead because he wasn't picking up his phone.

She posted a video explaining what happened on TikTok to spread awareness and warn her followers not to fall for the scam.

“So I look like an emotional wreck right now because I’ve been crying for the past two hours because I thought my little brother was dead,” she says in the video. “Somebody out there used an AI machine to trick my grandpa into thinking my little brother got in a wreck.”

“I came to find out that it was a scammer that was trying to get money from my grandpa by calling and saying that he went to jail and he killed someone and he needed bail money.”

“All for money he acted like my little brother almost died,” she goes on. “How evil.”

Commenters poured in with personal stories and shared advice on how to tell if a call is fake.

An alarming number of people commented that a relative had recently received a fake call asking for bail or ransom money. "This happened to my dad. Someone called him with my voice saying I was kidnapped," wrote one user.

“Same thing happened to my aunt, my cousin was sobbing on the phone BEGGING, etc it was all AI that copied her voice in a band video from HIGH SCHOOL,” said another.

Scam callers are using AI technology to clone a voice from just a short clip.

The Federal Trade Commission issued a statement last month warning people about calls that use voice clones generated by artificial intelligence. All a scammer needs to clone a voice is a short audio clip (which could be taken from content posted online or recorded during a previous call to your phone) and a voice-cloning program.

Scammers are targeting older generations, who may be less aware of the new technology. Grandparents are receiving panicked calls from what sounds like their grandchildren, who say they have wrecked their car, landed in jail, or otherwise urgently need money sent to them.

A great way to verify whether a call is real is to create a safe word with family members. One user explained, "My family and I have created a code word in case of situations like this. if they get a call and that word isn't said then they'll know it's not real."

If you get a call from a relative asking for money but don't have a safe word set up, don't trust the voice alone. Hang up and call the person who supposedly contacted you directly to verify the story.

Maddie Haley is a writer for YourTango's news and entertainment team. She covers pop culture and celebrity news.